3. Understanding Spatial Indexes

A quick note about the practicals...

Remember that coding should be done in Spyder using the understandinggis virtual environment. If you want to install the environment on your own computer, you can do so using the instructions here.

You do not have to run every bit of code in this document. Read through it, and have a go where you feel it would help your understanding. If I explicitly want you to do something, I will write an instruction that looks like this:

This is an instruction.

Shortcuts: Part 1 Part 2

In which we go even deeper into Python programming, and learn how to optimise our spatial queries using a spatial index

Part 1

Throughout the past two weeks we have made use of functions in Python (e.g. print(), read_file() g.inv() etc. ). Now we are going to learn to make them for ourselves…

Functions in Python

A Function is simply a bit of code that does something (e.g. print() prints something to the console, read_file() reads a Shapefile into a GeoDataFrame and g.inv() works out the ellipsoidal distance between two locations). Functions are a very useful part of a programmers toolkit, as it means that you never have to repeat a line of code, you can simply write a function once and call it as many times as you like!

In week 2 I told you about a (relatively) simple calculation to get a spherical distance called the spherical cosine method. Below is a Python function that calculates a distance between two points using the spherical cosine method:

Just look at it for now and try to understand it (how the function works, not the maths!). There is no need to do any copying and pasting just yet!

from math import radians, acos, cos, sin

def spherical_distance(x1, y1, x2, y2):

"""

This is a special kind of comment used to describe what a function does.

This one calculates the distance between two locations along a sphere using the Spherical Law of Cosines.

"""

# Average radius of WGS84 spherical Earth model in m

R = 6371008

# convert to radians

a = radians(y1)

b = radians(y2)

c = radians(x2 - x1)

# calculate the spherical distance

distance = acos(sin(a) * sin(b) + cos(a) * cos(b) * cos(c)) * R

# return the distance

return distance

Using a function is referred to as calling it. When you call the above function, you pass it some values called arguments (in this case there are 4 arguments: x1, y1, x2, y2). The function processes those values and returns another value (as specified by the return statement in the function definition - in this case, the distance between the two locations described by the four arguments).

To call this function, you would use:

distance = spherical_distance(-2.79, 54.04, -2.75, 54.10)

This will calculate the distance between -2.79, 54.04 and -2.75, 54.10 and store the result in a variable called distance.

Constructing the function

Let’s break that function definition down a little:

Remember - don’t copy and paste anything unless I explicitly tell you to do so!

First, we import some mathematical functions that are required - these come from the math library. This would not normally happen inside the function, but rather at the top of the Python file that contains it (the same place that we always put our import statements!):

from math import radians, acos, cos, sin

Then, we define the function using the keyword def (short for define) - this is where we give it a name and define the list of arguments that it should receive in some parentheses (()). If multiple arguments are required, then we simply separate them by commas (,); if no arguments are required, simply put some empty parentheses (()). Finally, we use a colon (:) to let the python interpreter know that the indented code block that follows is what the function should do (just like in a for loop). As described above, in this case we will use four arguments, describing two coordinate pairs:

def spherical_distance(x1, y1, x2, y2):

The next section should be indented from the definition line (as it is the code block that will run when the function is called - this is just the same as the code block that we used inside our for loops last week). The python interpreter will consider everything that follows the : to be part of the function until it is no longer indented, like this:

def spherical_distance(x1, y1, x2, y2):

# part of the function...

# ...still part of the function...

# ...once again, part of the function...

# ...not part of the function!

You indent things in Python using the Tab button (which might be marked as ⇥ on your keyboard). The indented code block handles the maths to calculate the distance, storing the ultimate result in a variable called distance:

# calculate spherical distance and return

distance = acos(sin(a) * sin(b) + cos(a) * cos(b) * cos(c)) * R

Finally, we return the resulting distance using the return keyword:

# return the distance

return distance

Note that functions do not have to have a return statement. For example, print() is a function that does not return any values (it just prints something to the console), so there will be no return statement in print().

Calling the function

Now that we have a working function, we can call the function to perform a distance calculation. To achieve this, we simply write the name of the function followed by parentheses (()) containing the required arguments (they can be empty if no arguments are required, and they are separated by commas if several are required). If the function returns a value, then we can store that result in a variable using the assignment operator (=) in the normal way:

# calculate the distance between two locations

distance = spherical_distance(-2.79, 54.04, -2.75, 54.10)

# print the value that was returned

print(distance)

The value that was identified by the return keyword is the one that is stored in the distance variable.

Remember that I didn’t ask you to copy and paste any of that, if you did, delete it!

Don’t worry if that seems like a lot to take in, once you start writing functions for real it will all become perfectly clear! Let’s get started right now…

Create a new Python file:

./week3/week3.py

Create a function for measuring distance using the Pythagorean Theorem, based upon the below template - functions should be located at the top of your code, but below any

importstatements. You will need this in Part 2 so make sure you keep a copy of it!

# THIS IMPORTS A SQUARE ROOT FUNCTION, WHICH IS A BIG HINT!!

from math import sqrt

def distance(x1, y1, x2, y2):

"""

* Use Pythagoras' theorem to measure the distance. This is acceptable in this case because:

* - the CRS of the data is a local projection

* - the distances are short

* - computational efficiency is important (as we are making many measurements)

"""

return # complete this line to return the distance between (x1,y1) and (x2,y2)

Hint: if you get stuck, remember that the material from Week 2 might help you out!

Warning: When running copied and pasted code, you might get an error that looks like this:

unindent does not match any outer indentation level

This means that the indentations in your code are a mixture of spaces and tabs, which is confusing the Python interpreter. Fortunately, this is an easy fix - you can either:

- Get Spyder to do it for you by selecting Source→Fix Indentation

- Manually replace the offending space-based indents with tabs (just delete the indentation, and then tab the line back across to where it is supposed to be). Remember that the error message will tell you which line is causing the problem, and that you might have to replace a few lines before it starts working (normally all of the lines that you just pasted).

Either way should work fine! Be sure to remember this - it will come up relatively often during your Python career - it is the price we pay for the simple indent-based syntax (and also for copy-pasting code from the web…)!

Test your function to get the coordinates between

345678, 456789and445678, 556789to make sure that it works correctly

You should get something like the following result:

141421.35

If so, then great! Your function works!!

And now, back to the lecture…

Part 2

This week we are going to have a look at research being undertaken in department, which looks into the optimisation of well locations (for drinking water) in Northern Uganda in order to reduce inequalities in access to clean water. In Uganda, you might refer to these as boreholes (shown here, with a former PhD student trying to hide behind a stick…), but for simplicity we’ll refer to them as wells throughout:

We are going to undertake one part of the analysis involved in this work, which is to calculate the mean distance that anyone has to walk to get to the nearest well within their district. This is important because water supply in Uganda is managed at district level, and so the analyses need to reflect this.

As map data are scarce in this region, we will do this making some assumptions and accepting some caveats:

- in the absence of a complete network dataset, distances will be measured ‘as the crow flies’. This is not an unreasonable assumption here because:

- the distances are generally quite small so the difference will generally also be quite small

- many people do not travel along the network, but will go through fields etc to collect water. For this reason, a network distance can actually overestimate distances

- people’s location will be calculated using a population data model made available by Facebook Labs. This is probably the best available population data for this region, but is certainly not perfect.

- existing well locations will be taken from a dataset made available by the Ugandan Bureau of Statistics. We know that this is incomplete, but it is (again) the best available. This dataset covers a much larger area, and so will need to be cut down to the district first.

Following our discussion in the lecture, we know that there are several ways that we could approach this task, but the volume of data that we are dealing with dictates that we should make use of a spatial index (courtesy of the rtree library) in order to make our calculations as efficient as possible.

Before we do that though - we need to take a moment to consider our data…

Matching up the CRS’

As ever, before we embark on any type of GIS analysis, we need to consider the CRS. This is especially important in this case, because we are going to be making our distance calculations using projected coordinates. Distortions in the projection will therefore significantly affect our results if we are not careful. We therefore need to make sure that all of our datasets are using the same CRS, and that the CRS is appropriate for our area of interest (Northern Uganda).

Before we do anything - we should really have a look at what CRS’ the data are already using. Fortunately, geopandas makes this very easy for us. As we already know, when we open a dataset using read_file(), we get a GeoDataFrame object back, which includes a set of properties and functions. Amongst these are everything that we need to check the CRS of our dataset (with the .crs property) and change the CRS of the dataset (with the .to_crs() function).

Firstly, let’s take a look at our data, add the below snippets to your code to load the three datasets (note that the

distance()function that you made in part 1 should also be in this file!):

from geopandas import read_file

# read in shapefiles, ensure that they all have the same CRS

pop_points = read_file("../data/gulu/pop_points.shp")

water_points = read_file("../data/gulu/water_points.shp")

gulu_district = read_file("../data/gulu/district.shp")

Now, use the

print()function to print out thecrsof each of the three datasets (e.g.pop_points.crs). Are they the same? To make them easier to read, you can print a blank line between each of them by adding an emptyprint()statement.

Hopefully you can see that they are not all the same, and you should get three rather different definitions. Depending on your system, the output of this will look different - on Windows machines it often looks like this:

epsg:21096

epsg:4326

epsg:21096

Whereas on my machine you might get something more like this:

PROJCS["Arc_1960_UTM_zone_36N",GEOGCS["GCS_Arc 1960",DATUM["Arc_1960",SPHEROID["Clarke 1880 (RGS)",6378249.145,293.465,AUTHORITY["EPSG","7012"]],AUTHORITY["EPSG","6210"]],PRIMEM["Greenwich",0],UNIT["Degree",0.0174532925199433],AUTHORITY["EPSG","4210"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",33],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH]]

epsg:4326

epsg:21096

NOTE: The way in which these definitions are presented will vary from system to system, and so your output might not be exactly the same as either of the above - this does not matter, the key thing to notice is that they are represented differently, and so difficult to compare!

In this latter example, the first one is a WKT String (just like the ones that we looked at in Week 1; and that we can get from the Projection Wizard). The other two are EPSG Codes, which refers to a numeric code defined in a database managed by the European Petroleum Survey Group - the code can be used to search the database (which is built into GeoPandas and most major GIS software) and return the parameters that describe the CRS. In all, we now therefore know about three different ways to represent CRS’:

- EPSG Codes

- Projection Strings

- WKT Strings

There are more as well, but these three are the most common. To make the CRS’ easier to compare, we can specify which form we would like our output to be in using these functions:

.to_epsg()- represent the CRS as an EPSG code.to_proj4()- represent the CRS as a Projection String.to_wkt(): represent the CRS as a WKT String

These functions are all member functions of the CRS Object, and so you use them by simply appending the function to the .crs in each of your statement (as .crs is a property of the GeoDataFrame Object that provides an Instance of a CRS Object that represents the CRS of your GeoDataFrame).

Use one of the functions to allow you to compare the three CRS’ using the WKT format

Here is what you should expect to get back from using .to_wkt():

PROJCRS["Arc_1960_UTM_zone_36N",BASEGEOGCRS["GCS_Arc 1960",DATUM["Arc 1960",ELLIPSOID["Clarke 1880 (RGS)",6378249.145,293.465,LENGTHUNIT["metre",1]]],PRIMEM["Greenwich",0,ANGLEUNIT["Degree",0.0174532925199433]],ID["EPSG",4210]],CONVERSION["UTM zone 36N",METHOD["Transverse Mercator",ID["EPSG",9807]],PARAMETER["Latitude of natural origin",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",33,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["Scale factor at natural origin",0.9996,SCALEUNIT["unity",1],ID["EPSG",8805]],PARAMETER["False easting",500000,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]],ID["EPSG",16036]],CS[Cartesian,2],AXIS["easting",east,ORDER[1],LENGTHUNIT["metre",1,ID["EPSG",9001]]],AXIS["northing",north,ORDER[2],LENGTHUNIT["metre",1,ID["EPSG",9001]]]]

GEOGCRS["WGS 84",DATUM["World Geodetic System 1984",ELLIPSOID["WGS 84",6378137,298.257223563,LENGTHUNIT["metre",1]]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433]],CS[ellipsoidal,2],AXIS["geodetic latitude (Lat)",north,ORDER[1],ANGLEUNIT["degree",0.0174532925199433]],AXIS["geodetic longitude (Lon)",east,ORDER[2],ANGLEUNIT["degree",0.0174532925199433]],USAGE[SCOPE["unknown"],AREA["World"],BBOX[-90,-180,90,180]],ID["EPSG",4326]]

PROJCRS["Arc 1960 / UTM zone 36N",BASEGEOGCRS["Arc 1960",DATUM["Arc 1960",ELLIPSOID["Clarke 1880 (RGS)",6378249.145,293.465,LENGTHUNIT["metre",1]]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433]],ID["EPSG",4210]],CONVERSION["UTM zone 36N",METHOD["Transverse Mercator",ID["EPSG",9807]],PARAMETER["Latitude of natural origin",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",33,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["Scale factor at natural origin",0.9996,SCALEUNIT["unity",1],ID["EPSG",8805]],PARAMETER["False easting",500000,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]]],CS[Cartesian,2],AXIS["(E)",east,ORDER[1],LENGTHUNIT["metre",1]],AXIS["(N)",north,ORDER[2],LENGTHUNIT["metre",1]],USAGE[SCOPE["unknown"],AREA["Africa - Kenya and Uganda - north of equator and 30°E to 36°E"],BBOX[0,29.99,4.63,36]],ID["EPSG",21096]]

Now, use

.to_epsg()to get the EPSG code for each of them

21096

4326

21096

Interestingly, two of them seem to have the same EPSG code, but slightly different WKT definitions, so it is clear that we need to be careful even when they appear to be the same!

Let’s see what they all mean: You can look up pre-existing projections by their code in the EPSG database using websites such as epsg.io. Simply type in the number (omitting the epsg: part) and it will show you the details of the corresponding CRS:

Examining the CRS of pop_points and gulu_district:

Find the details for the CRS with the EPSG code 21096

If all has gone to plan, you should be looking at the details of a CRS called Arc 1960 / UTM zone 36N. This is part of the Universal Transverse Mercator (UTM) system, in which the majority of the surface of the Earth is divided up into 1,144 zones that are (mostly) in a 60 × 20 grid of local projections designated by a code in the order column, row, where the column is a number between 1 and 60 and the row is a letter between C and X. Here are the zones that cover Africa (click here to see the full grid):

Source, Wikipedia

As you can see, your projection is UTM zone 36N, which you can confirm covers Northern Uganda in the above image. We are lucky that our area of interest falls entirely within one UTM zone - as you can see, they rarely align with countries. As we saw in the lecture, UTM zone 36N is a local conformal projection that is quite similar to what we might have made using the Projection Wizard.

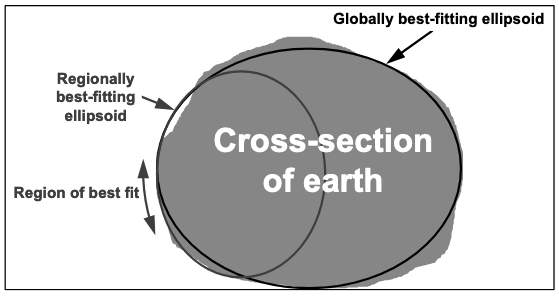

Note also that the full name of this projection is Arc 1960 / UTM zone 36N. The Arc 1960 part refers to the datum that the CRS uses (this is the reference frame that maps an ellipsoid to the Earth - in this case, the ellipsoid is Clark 1880). Like the projections that are built on top of them, datums also have local and global variants. You will remember that we have so far used the WGS84 datum, because this is a global datum, meaning that it is generally a good fit for global analysis (i.e. it is a generally good fit for the geoid at a global scale). For work concentrated in Africa, a local datum called Arc 1960 is a better fit (i.e. it is a better fit for the geoid at the location of Africa, but a poor fit elsewhere) - hence it is the one that we will use here. For working in the UK, OSGB 36 datum, which is based on the Airy 1830 ellipsoid is a better fit (it is designed specifically to use in the UK only), and this is why it is used in the Ordnance Survey’s British National Grid. The difference between a global ellipsoid and a regional ellipsoid is illustrated below:

Source: Ordnance Survey

It is important to be aware of the difference between global and local datums, and that the choice of datum is an important consideration where high levels of accuracy are needed. However, be aware that this is not currently supported by the Projection Wizard, which always defaults to WGS84.

For reference, if you scroll down a little on the epsg.io page, you will see a variety of ways that you can export your chosen projection, including Projection Strings (listed as Proj.4) and WKT Strings (listed as OGC WKT). In this case, we do not need them, as we already have the CRS stored in our pop_points object!

Examining the CRS of water_points:

For completeness, now look up the details for the CRS with the EPSG code 4326

This is good old WGS84 CRS. It is a source of great confusion that WGS84 is the name of an ellipsoid and a 3-dimensional CRS (i.e. one using longitude and latitude) based on that ellipsoid - so don’t get confused!

Matching up the CRS’ of our datasets:

We can plainly see that these datasets are all stored using different CRS’. This is not good for analysis, particularly as they area a mixture of geographical (3-dimensional, measured in degrees - WGS 84) and projected (2-dimensional, measured in metres - Arc 1960 / UTM zone 36N) CRS’. We therefore want to make it so that they all match up, which we can do using the .to_crs() function of the GeoDataFrame object. Here is an example:

# read in the `water_points` dataset AND transform it the the same CRS as `pop_points`

water_points = read_file("../data/gulu/water_points.shp").to_crs(pop_points.crs)

Based on the above example, set all of your layers to the same CRS

Check that this has worked by printing the CRS of all three again. They should all now be the same!

Once you are satisfied, remove all of your

print()statements relating to CRS’

Building a Spatial Index

A Quick note on Spatial Indexes and GeoPandas

Interestingly, GeoPandas actually has spatial index functionality already built into it via the pygeos library, and will use a spatial index for you in order to help with some topological operations on GeoDataFrame / GeoSeries objects. However, this is a higher level implementation that hides some of the detail. Whilst convenient for a general geopandas user, this approach is not a great way to understand what a spatial index is, nor how one is used; which is the main purpose of this course. This is why we are choosing to create our own using rtree. If you want to know more about the GeoPandas Spatial Index, you can do so here. Additionally, later in the course you will need to build spatial indexes for datasets that cannot be stored in a GeoDataFrame, so understanding this from the outset really is valuable!

Building your own Spatial Index with rtree

Now we are happy that our data is ready for use, we can make a start with our analysis.

The first thing that we need to do is to remove all of the points from water points that are outside of our area of interest ( which is defined by Gulu District). This should be simple enough based upon our knowledge of Topology from last week: we simply need to extract all of the points that are within the district polygon, and discard the rest.

Once we have done that, our second job will be to work out the nearest feature in well_points (well) to each feature in pop_points (house) and calculate the distance between them using the distance() function that we created earlier. We can then map those differences and see what patterns emerge!

Both of these sound quite simple on the face of it, and they are. The problem only arises from the volume of data with which we are dealing:

Add the below snippet to your code to see how many wells and population points we have:

print(f"population points: {len(pop_points.index)}")

print(f"Initial wells: {len(water_points.index)}")

Note the form f"...". You have seen statements like this before in this course - they are called f-strings (formally, formatted string literals), and are a simple way to combine python functions with text output. You simply add an f to the start of the string definition, and then you can put Python statements inside curly braces { } inside the string. At the time that the string is used, the python values are calculated, and the results are added into the string - Simple! You can read more about f-Strings here.

If you run the above print() statements, you should see that we have 3,000 wells and over 42,702 population points - that would mean 3,000 within queries and over 128 million distance calculations…!

Whilst this is certainly possible, it would be extremely inefficient and will take a very long time. The solution, as we saw in the lecture, is to use a Spatial Index in order to reduce the number of calculations that we need to do to answer this questions, and therefore make our program much more efficient. We will do this using the excellent rtree library for Python, which implements R-Tree spatial indexes (after Guttman, 1984) and is indispensable in helping us to speed up our analyses.

Add the below two snippets to your code at the appropriate locations in order to construct a spatial index on the

water_pointsdataset using thertreelibrary:

from rtree.index import Index

# initialise an instance of an rtree Index object

idx = Index()

# loop through each row in the wells dataset and load into the spatial index

for id, well in water_points.iterrows():

idx.insert(id, well.geometry.bounds)

This short statement simply creates a new spatial index object (stored in the idx variable) and then loops through each row in our water_points dataset, inserting the index (a unique identifier) and coordinates describing the bounding box (the smallest rectangle aligned to the axes of the CRS that contain the feature) of each feature into idx. Note that the unique identifier that we use is simply the row index from the GeoDataFrame (0, 1, 2, 3 etc…), and that we calculate the bounds by accessing the geometry column of the current row (.geometry, which returns an instance of a shapely geometry object), and then using the .bounds property of that object, which provides a tuple containing the coordinates of the bounding box of the geometry:

Once the loop is complete, we have a spatial index!!

Take note of how that

forloop works by looking it over carefully and reading the below - you will need it later on in the practical!!

Looping through GeoDataFrames

Consider this line again:

for id, well in water_points.iterrows():

Because of the complexity of the GeodataFrame data format, you cannot simply loop through it as you could through a list or any other ‘iterable’ object. Instead, you have to use its .iterrows() function to return a data structure that you can loop through.

When you use this approach, .iterrows() returns two values in each iteration of the loop (as opposed to the one value that we were getting last week). For this reason, we have two variables in our for statement: id and well (remember that these are variables so you can call them whatever you like):

idcontains the index of the dataset - this is a unique identifier for each line, and by default will simply be a series of numbers (0,1,2,3etc…). This is the number that.iloc(index location) was using last week.wellcontains the row of data in the loop, giving you access to all of the columns including thegeometry.

Make sure that this makes sense to you. If you don’t get it - ask!

This is really important, simply attempting to loop through the GeoDataFrame as if it were a list will not cause an error - it will simply loop through all of the column names in the dataset (which is very different to looping through every row in the dataset!). For example, if you did this:

# incorrectly attempt to loop through a GeoDataFrame

for well in water_points:

print(well)

…then you would get this:

DISTRICTNA

COUNTYNAME

SUBCOUNTYN

PARISHNAME

VILLAGENAM

FNAME

FCODE

FTYPE

EASTING

X

NORTHING

Y

ALTITUDE

REMARKS

geometry

This is clearly not what you want, but you would not believe how often people make this mistake and don’t notice!! This is something you must remember: if you want to loop through a GeoDataFrame, you must use .iterrows().

Optimising Intersections with a Spatial Index

Now we have our spatial index, we can put it to work by making it help us to filter our wells dataset to exclude all wells that are outside of the Gulu District (which is defined by the polygon stored in district).

This is how the process will work:

- Get our spatial index representing wells

- Use it to very quickly return the index (ID) numbers of all of the wells that are contained within the bounding box of

district - Query the

GeoDataFrameusing.ilocto return the rows that match that list of index (ID) numbers - Use a topological

withintest to assess whether each geometry in the resulting (much smaller) dataset is inside the district or not. - Re-build your spatial index to only include those features that are

withinthedistrictpolygon.

The result of this approach is that we only need to do a within query on 1,002 geometries, rather than the full 3,000, meaning that the operation will only take about a third of the time to run. Pretty good eh!

Let’s give it a go:

Paste the below four snippets into your code to utilise the spatial index, making sure that you fully understand each step:

1: Retrieve the polygon geometry using .iloc[0] and report the number of wells in the dataset using a print() statement and f-string:

# get the one and only polygon from the district dataset

polygon = gulu_district.geometry.iloc[0]

# how many rows are we starting with?

print(f"Initial wells: {len(water_points.index)}")

2: Use the spatial index to return a list of the ID’s of the wells that intersect the bounds of the district polygon. Note that this is simply a list of the ID’s, not the features themselves!

# get the indexes of wells that intersect bounds of the district

possible_matches_index = list(idx.intersection(polygon.bounds))

3: Use the resulting list to query the water_points GeoDataFrame using .iloc (notice that this time we are passing a list of index numbers to it, rather than just a single one as in step 1). Report the number of wells that meet this criteria.

# use those indexes to extract the possible matches from the GeoDataFrame

possible_matches = water_points.iloc[possible_matches_index]

# how many rows are left now?

print(f"Filtered wells: {len(possible_matches.index)}")

Note that we work this out by printing the length of one column. In this case, we are using the index, which is the list of ID numbers that correspond to each row (0, 1, 2, etc.). Though any column would do, we normally use index as this is the column that is guaranteed to be in every single dataset!

4: Finally, use the smaller dataset to do the (slower but more precise) within query. This time, instead of a list of ID numbers ([1, 5, 17, etc...]) we get a list of Boolean values back ([True, False, True, True, etc...]), with one value for each row describing whether or not it is within the polygon or not. To extract the corresponding rows this time, we must therefore use .loc (which can take a Boolean input), rather than .iloc (which only takes a list of index numbers):

# then search the possible matches for precise matches using the slower but more precise method

precise_matches = possible_matches.loc[possible_matches.within(polygon)]

# how many rows are left now?

print(f"Filtered wells: {len(precise_matches.index)}")

Well done! you should now have a list of 744 wells in the Gulu District of Northern Uganda!

Optimising Nearest Neighbour with a Spatial Index

Now we have filtered our well dataset, it is time to do our actual analysis. To do this, we need to know the distance from each population point to its nearest well. Once we know this, we can easily work out the mean distance. We could do this by working out the distance from each population point to each well, and then take the minimum for each one. The downside is that this would be (42,702 × 744 =) 31,770,288 distance calculations, which would take quite a while!

Fortunately, we can reduce this substantially by once again leveraging the power of our Spatial Index. In this case, we are going to use the idx.nearest() function in order to determine the nearest neighbour of each of our population points, reducing the number of calculations from nearly 32 million to just 42 thousand. This is another huge gain in efficiency, as it avoids about 99.9% of the computation that we would otherwise have had to do!

Rebuilding out Spatial Index

However, before we can do anything with our Spatial Index, we need to stop and think for a second… We are now in the position where we have reduced the amount of data in our GeoDataFrame (now only 744 wells), but our Spatial Index no longer matches it (it still contains 3,000 wells). If we had completed our analysis and were not planning to make any more use of the Spatial Index, then this would be no problem, but in our case we are going to use it again, so we need to re-build it to make sure that it matches the GeoDataFrame.To do this, we simply overwrite the idx with a new Index object that reflects the contents of precise_matches instead of water_points.

Paste in the below snippet and add the missing lines to rebuild the index to only include the wells in

precise_matches, using exactly the same process as you did earlier:

# rebuild the spatial index using the new, smaller dataset

idx = Index()

# MISSING LINE OF CODE

# MISSING LINE OF CODE

Nearest Neighbour analysis with rtree

Don’t panic about the next step, you have done both of these things before, one of them today!! If you can’t remember what to do it is fine, you can use the hints page to find information on both defining an empty list and looping through a GeoDataFrame

Declare an empty

listin a variable calleddistancesOpen a

forloop that iterates through thepop_pointsdataset, using the variablesidandhouseto store the index and row respectively

Once you have done that, it is time to put a block of code inside your loop. We have three steps to complete for each population point:

- Get the index of the nearest well to the current population point (

house), extract the one and only value from the list - Use the resulting index number to get the relevant row using

.iloc - Measure the distance between the current population point and the nearest well, append the result to the

distanceslist that you initialised outside of the loop

Here’s how:

1: Get the index of the nearest well to the current population point:

# use the spatial index to get the index of the closest well

nearest_well_index = list(idx.nearest(house.geometry.bounds, 1))[0]

A lot is going on in this line:

idx.nearest(house.geometry.bounds, 1): use thenearestfunction of your spatial index to get the id of the nearest1points in the index to the specified location (which is defined by house.geometry.bounds`, because spatial indexes only think in terms of bounding boxes)- Convert the results to a list (of one item) using

list() - Extract the one and only result from the list using

[0](just as we would from any other list)

Note that this could be done across several lines of code, but as we will not be using any of the intermediate steps it is more elegant (and sometimes more efficient) to do it in a single line.

Add the above snippet to your loop (pay attention to the indentation to make sure that it is inside the loop block!)

2: As with idx.intersects(), the spatial index only gives us a list of the ID of the nearest well, not the row from the water_points dataset itself. Once again, we therefore need to get the corresponding row of data from the GeoDataFrame using your index and .iloc

# use the spatial index to get the closest well object from the original dataset

nearest_well = water_points.iloc[nearest_well_index]

Add the above snippet to your loop (pay attention to the indentation to make sure that it is inside the loop block!)

3: Measure the distance and append the result to your distances list:

# store the distance to the nearest well

distances.append(distance(house.geometry.bounds[0], house.geometry.bounds[1], nearest_well.geometry.bounds[0], nearest_well.geometry.bounds[1]))

Two things are going on here:

- We are measuring the distance using a function called

distance()- this is the function that you created in Part 1! - We are appending the result directly to our

distanceslist usingdistances.append()

Again, that these steps could be done across two separate lines of code (get distance then add to list), but as we will not use the distance outside of the context of the distances list this is a more elegant solution.

Add the above snippet to the loop (pay attention to the indentation to make sure that it is inside the loop block!) and run your code to make sure that it all works.

Look back over the code that you have so far and make sure that you understand how it works. In particular, note that you need to completely build the Spatial Index (by looping threough the

GeoDataFrame) and then use it afterwards, you can’t just build it as you go, which is a common error that people make!

A note on the Distance Function

In contrast to last week, where we were measuring a single, very long distance; this week we are measuring lots of very short distances. In order to enable us to do this efficiently, we decided not to use the most precise measurement technique (the inverse Vincenty method), but rather use the much more efficient approach of using the Pythagorean Theorem (measuring on a flat surface), which is less computationally intensive. This is particularly the case with data that are already projected, such as this one, as we would need to first de-project the data to 3-dimensional longitude-latitude coordinates before we could calculate the ellipsoidal distance!

We can get away with this because we have selected an appropriate local projection (which minimises distortion) and are measuring over sufficiently small distances (mostly <1km). The combination of these facts means that we can be confident that any error will be negligible.

Using our distance data

If all has gone to plan, you should now have a list of 42,702 distances (in metres). It doesn’t run instantly (we are still doing a lot of work here), but shouldn’t take too long. We don’t want to print this to the console as it is so large, but we can print the length of it like this:

print(len(distances))

and the first 5 entries like this:

print(distances[:5])

Try both of these to see if your distance data is as expected

Now we have a list of distances, we can add them to our pop_points dataset as a column - where each row in the column represents the distance to the nearest well for that house (in metres). We saw how to do this in week1, it is very simple:

# store distance to nearest well

pop_points['nearest_well'] = distances

Add the above snippet to your code and run it - then test if it works by printing the columns list for this dataset.

We can also use our list of distances calculate the mean distance, which is simply a matter of dividing the sum of all of the distances (sum(distances)) by the number of distances (len(distances)).

Calculate the mean distance and store it in a variable called

mean

Now, we can report some simple statistics about the distances using f-strings:

print(f"Minimum distance to water in Gulu District is {round(min(distances))}m.")

print(f"Mean distance to water in Gulu District is {mean}m.")

print(f"Maximum distance to water in Gulu District is {round(max(distances))}m.")

Add the above snippet to your code and run it

If all has gone to plan, you should have something like the following:

Minimum distance to water in Gulu District is 2m.

Mean distance to water in Gulu District is 863m.

Maximum distance to water in Gulu District is 10387m.

If so then well done - your analysis is complete!

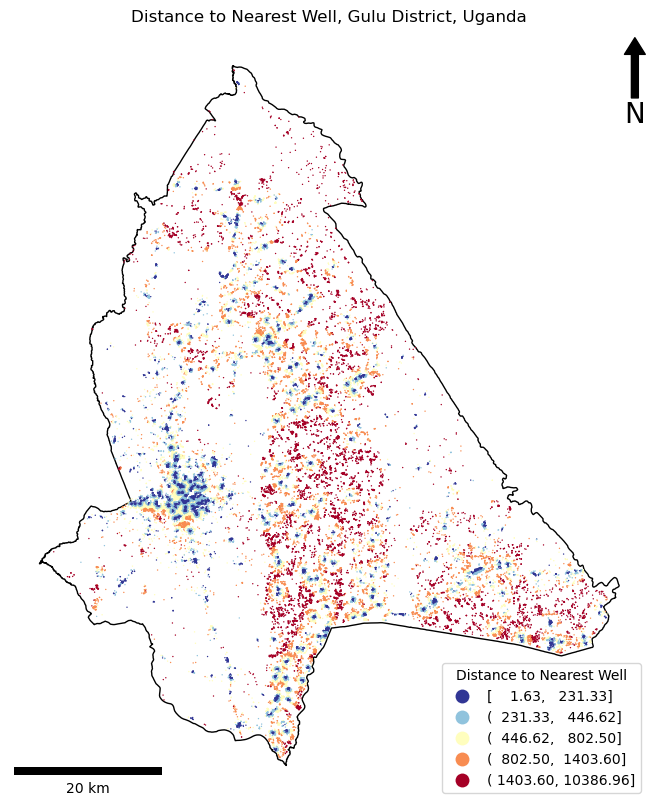

Plotting the Map

Now we have completed our analysis, it is time to map it! Plotting this map is nothing that you haven’t seen before in weeks 1 and 2, but be sure to have a read through and making sure that you understand it - as you will have to be able to plot maps on your own soon:

from matplotlib_scalebar.scalebar import ScaleBar

from matplotlib.pyplot import subplots, savefig, title

# create map axis object

fig, my_ax = subplots(1, 1, figsize=(16, 10))

# remove axes

my_ax.axis('off')

# add title

title("Distance to Nearest Well, Gulu District, Uganda")

# add the district boundary

gulu_district.plot(

ax = my_ax,

color = 'white',

linewidth = 1,

edgecolor = 'black',

)

# plot the locations, coloured by distance to water

pop_points.plot(

ax = my_ax,

column = 'nearest_well',

linewidth = 0,

markersize = 1,

cmap = 'RdYlBu_r',

scheme = 'quantiles',

legend = 'True',

legend_kwds = {

'loc': 'lower right',

'title': 'Distance to Nearest Well'

}

)

# add north arrow

x, y, arrow_length = 0.98, 0.99, 0.1

my_ax.annotate('N', xy=(x, y), xytext=(x, y-arrow_length),

arrowprops=dict(facecolor='black', width=5, headwidth=15),

ha='center', va='center', fontsize=20, xycoords=my_ax.transAxes)

# add scalebar

my_ax.add_artist(ScaleBar(dx=1, units="m", location="lower left", length_fraction=0.25))

# save the result

savefig('out/3.png', bbox_inches='tight')

print("done!")

Make sure that you understand the above two snippets. If so, add them to your script and run it. If not, ask!

If all has gone to plan, you should have something like this:

If so, Congratulations!! There are some very clear spatial patterns here that we could now further analyse in order to quantify the apparent patterns in the inequality of access to water.

One thing to pay attention to is the legend - the automated classification has surrounded the numbers with a mix of brackets (various combinations of () and []). Though some people think that this looks untidy - this is actually scientific notation for ranges:

[a, b]means that the range is inclusive - it includes the valuesaandb.(a, b)means that the range is exclusive - it does not include the valuesaandb.

From there you can start to mix and match. For example, (a, b] therefore means that the range includes all values greater than a (>a) and less than or equal to b (<=b). Simple!

Finally, demonstrate your understanding by having a play with different classification schemes and colour ramps.

Once you are happy that you understand how this all works, you’re done! This was a challenging week, so very well done and I’ll see you next week!

A little more…?

If you want to keep going:

Adjust your analysis so that it includes water points that are within 10.5km of the border of Gulu District

This is important, as the nearest water source for some people might actually be outside of the area! This is, after all, nothing more than an arbitrary political boundary - it will have little or no bearing on people’s behaviour. By limiting it to 10.5km (roughly the maximum distance anyone has to travel in the current model), we can still keep our analysis efficient, whilst ensuring that any relevant wells are included.

To achieve this - we simply need to buffer the geometry of the District (stored in polygon) by 10500m. This can be achieved using the .buffer() function included in Shapely (and so available on the geometry object that you extract from geopandas):

You will notice that this increases the number of wells in the analysis, but (in this case) only makes a very small difference to the mean result (it does not affect the maximum or minimum distance):

Before:

Initial wells: 3000

Potential wells: 1002

Filtered wells: 744

Minimum distance to water in Gulu District is 2m.

Mean distance to water in Gulu District is 864m.

Maximum distance to water in Gulu District is 10387m.

After:

Initial wells: 3000

Potential wells: 1458

Filtered wells: 975

Minimum distance to water in Gulu District is 2m.

Mean distance to water in Gulu District is 863m.

Maximum distance to water in Gulu District is 10387m.

Even more?

How many buildings are more than 5 km from the nearest well?

Considering the average household size in Uganda, how many people (approximately) have to travel more than 10 km to the nearest well?